Hot loading of C++ code at run time has become a popular technique among game programmers. The motivation behind these techniques is to shorten the code-compile-verify cycle. If this cycle starts taking too long, all kinds of negative effects set in (frustration, boredom, disengagement) and productivity drops rapidly.

With any sufficiently large code base, there are two main contributing factors to the unproductive portion of this cycle:

- Compilation time – even a small change in code may trigger lengthy recompilation.

- Program state – loading data and computing complex program state can take a long time. It is wasteful to exit the program, throw away its state, start fresh, and try to recreate the previous state just because of a small tweak in the code.

Traditionally, the go-to solution has been to integrate a scripting language. Without going into much detail, suffice it to say that this brings a host of new problems: a large-ish software dependency, the need to write or generate interop code, mismatches between native and scripting language concepts, performance, and so on. While traditional scripting is certainly a good choice in many cases, alternatives have emerged, such as visual node graph editors (think Unreal Blueprints).

The idea of using C++ as both the native language and the scripting language is not new, and its advantages have been discussed at length. A few interesting projects that I know of approach it quite differently:

- Cling – interactive C++ interpreter and REPL built on LLVM and Clang. Cling can be used either standalone or embedded in a host program.

- Live++ – run-time hot reloading solution. It can recompile a program or library and hot-patch it in memory while it’s running.

In contrast with those sophisticated tools, there is a simple but effective alternative: compile a C++ script into a shared object (DLL), then load it into the host process and execute it. This approach has been explored many times [1, 2, 3, 4, and others]. However, I think those attempts were sometimes over-engineered. Their authors created small frameworks with lots of features and restrictions, and sometimes tried to force C++ to be something it is not: a real scripting language. Here we only use it as if it were a scripting language. I figured something much simpler would fulfill my requirement:

Without exiting my program and throwing away its state, I want to compile and run some additional C++ code and let it fiddle with my program's state.

Conceptually and practically, the code to achieve this does not have to be complicated. Here is a fully functional minimal version of this technique, with the host side written in C using the POSIX `dlopen`/`dlsym` API:

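```c
#include <dlfcn.h>   /* dlopen, dlsym */
#include <stdlib.h>  /* system */

void rt_execute(void *context) {
    /* Compile the script into a shared object (-fPIC is required on most platforms). */
    if (system("g++ -shared -fPIC -o script.so script.cpp") != 0)
        return;

    void *h = dlopen("./script.so", RTLD_LAZY);
    if (!h)
        return;

    /* The script must export entry_fn with C linkage (extern "C"). */
    void (*entry_fn)(void *) = (void (*)(void *))dlsym(h, "entry_fn");
    if (entry_fn)
        entry_fn(context);

    /* perhaps later: dlclose(h); */
}
```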
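For completeness, here is a sketch of what `script.cpp` might contain. Because the script is compiled with `g++`, the entry point needs C linkage; otherwise the compiler mangles its name and `dlsym(h, "entry_fn")` returns `NULL`. Treating the context as a plain `int` counter is only an assumption made for illustration.

```cpp
// script.cpp: compiled into script.so by rt_execute().
// extern "C" disables C++ name mangling, so the host can look the
// symbol up by its plain name with dlsym(h, "entry_fn").
extern "C" void entry_fn(void *context) {
    // Assumption for this sketch: the host passes a pointer to an int.
    int *counter = static_cast<int *>(context);
    ++*counter;  // fiddle with the host's state
}
```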

Any features on top of that (apart from error checking, obviously) would just be added convenience. For example, we could implement a manager for the build environment and compiler switches, or watch the script file for changes and automatically recompile and reload it. In particular, I think there is no need for any complex data exchange mechanism, something previous attempts explored quite diligently. Just put any shared data structures into a common header and include it from the script. Then pass a pointer to this structure from the host application to the script. What is behind this pointer is entirely up to the user: it can be nothing, or it can be a whole game engine with many complex subsystems and interfaces.
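A minimal sketch of the shared-header idea, assuming a hypothetical header `shared.h` with a hypothetical `HostState` struct (neither comes from the library). The host owns the object; the script only casts the `void *` it receives back to the type it knows from the common header. The three parts are shown in one listing for brevity; in practice they live in separate files.

```cpp
#include <string>
#include <vector>

// ---- shared.h (hypothetical): included by both host and script ----
struct HostState {
    int frame = 0;
    float gravity = -9.81f;
    std::vector<std::string> log;
};

// ---- script.cpp: tweak the running program's state ----
extern "C" void entry_fn(void *context) {
    auto *state = static_cast<HostState *>(context);
    state->gravity = -1.62f;  // try moon gravity without restarting
    state->log.push_back("gravity changed at frame " + std::to_string(state->frame));
}

// ---- host side: the object lives in the host, the script only borrows it ----
// HostState state;      // long-lived program state
// rt_execute(&state);   // compile, load, and call entry_fn
```

Passing standard library types such as `std::vector` across the boundary is only safe when host and script are built in a binary compatible way, which is one of the consequences discussed below.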

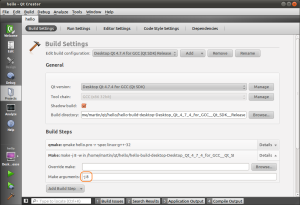

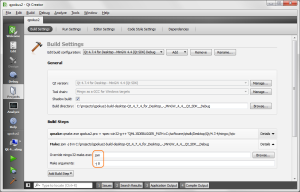

So I made my own Run-time Compiled C++ library, with minimal features and maximum flexibility. It's available on GitHub: https://github.com/martinky/qt-interactive-coding. The library is made using Qt. Why Qt? Because Qt is my go-to framework when I need C++ with batteries included. I'll be using the library in my other Qt projects. And last but not least, Qt comes with its own cross-platform build system, qmake. By taking advantage of qmake, the library automatically works on multiple platforms, since I don't have to deal with the various compilers directly.

With this simple approach, there aren’t really any restrictions on what can or cannot go into a C++ script. However, we have to keep in mind that the script code is loaded and dynamically linked at run-time and there are some natural consequences of this. These are familiar to anyone who has made a plugin system before:

- We are loading and executing unsafe, untested, native code. There are a million ways to shoot yourself in the foot with this. Let's just accept that we can bring the host program down at any time. This technique is intended for development only. It should not be used in production or in situations where you can't afford to lose data.

- Make sure that both the host program and the script code are compiled in a binary compatible manner: using the same toolchain, same build options, and if they share any libraries, be sure that both link the same version of those libraries. Failing to do so is an invitation to undefined behavior and crashes.
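One cheap safeguard, sketched here under assumptions (the names `SHARED_ABI_VERSION`, `script_abi_version` and `script_is_compatible` are hypothetical, not part of the library): bump a version constant in the shared header whenever a shared structure changes, have the script export the value it was compiled against, and let the host refuse to run a mismatched script. This only catches a stale header, not a different compiler or different build options, so it complements a consistent toolchain rather than replacing it.

```cpp
#include <cstdio>

// ---- shared.h (hypothetical) ----
// Bump this whenever a struct shared with scripts changes layout.
constexpr int SHARED_ABI_VERSION = 3;

// ---- script.cpp: report the version this script was compiled against ----
extern "C" int script_abi_version() { return SHARED_ABI_VERSION; }

// ---- host: check before calling entry_fn ----
// In a real host, version_fn would come from dlsym(h, "script_abi_version").
bool script_is_compatible(int (*version_fn)()) {
    if (!version_fn) {
        std::fprintf(stderr, "script does not export script_abi_version\n");
        return false;
    }
    int v = version_fn();
    if (v != SHARED_ABI_VERSION) {
        std::fprintf(stderr, "ABI mismatch: script %d, host %d\n", v, SHARED_ABI_VERSION);
        return false;
    }
    return true;
}
```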

- You need to be aware of object lifetime and ownership when sharing data between the host program and a script. At some point, the library that contains script code will be unloaded – its code and data unmapped from the host process address space. If the host program accesses this data or code after it has been unloaded, it will result in a segfault. Typically, a strange crash just before the program exits is indicative of an object lifetime issue.
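A sketch of one way to keep ownership straight, assuming a hypothetical host-side `CallbackRegistry` that scripts can register callbacks into. A lambda defined in the script has its code in the script's shared object, and so do the helper functions `std::function` generates for it, so the host must destroy every such callback before `dlclose()`. Otherwise the next call, or the destructor that runs at exit, jumps into unmapped memory. Likewise, data returned by the script, such as a `const char *` pointing at a string literal, should be copied into host-owned storage.

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Host-owned registry that scripts may add callbacks to (hypothetical).
class CallbackRegistry {
public:
    void add(std::function<void(int)> cb) { callbacks_.push_back(std::move(cb)); }

    void run(int frame) {
        for (auto &cb : callbacks_) cb(frame);
    }

    // Call this BEFORE dlclose(): destroys every callback whose code
    // (including its std::function helpers) may live in the script library.
    void clear_script_callbacks() { callbacks_.clear(); }

    std::size_t size() const { return callbacks_.size(); }

private:
    std::vector<std::function<void(int)>> callbacks_;
};

// Script-exported data: the literal lives in the script's read-only segment.
extern "C" const char *script_name() { return "gravity tweak"; }

// Host side: copy into host-owned memory so the value survives unloading.
std::string remember_script_name(const char *(*name_fn)()) {
    return std::string(name_fn());
}
```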